After an initial question from my friend and fellow MVP Koen Verbeeck, a bunch of people, myself included, started answering, among them Mohammad Ali, Group Program Manager for Power BI.

If a #MicrosoftFabric capacity is not running, you cannot use the #PowerBI reports? cc @BenniDeJagere @cwebb_bi

— Koen Verbeeck 🇺🇦 🌈 (@Ko_Ver) September 27, 2023

After a while it got me thinking:

- What does it actually mean when I pause a Fabric capacity?

- What will stop working?

- What can I still do, and what keeps working?

Important considerations

Microsoft Fabric is a prerelease online service that is currently in public preview and may be substantially modified before it's released. Preview online service products and features aren't complete but are made available on a preview basis so that customers can get early access and provide feedback.

A note before you start, as you might already be aware: Microsoft Fabric is still in preview, so keep in mind the limits on functionality, availability and supportability, which are described in detail here.

TL;DR

After playing around and testing various scenarios, I was quite surprised by a few of the answers I got, so keep reading if you want to find out!

In case you are not interested in the setup, you can also skip right to my tests or the conclusions.

Start setup

The steps I took to start exploring the capacity capabilities are the following:

- I created a Fabric capacity in the Azure portal for my tenant. You can even start a 30-day Azure free trial and use that to create a Fabric capacity. Erwin did a great job explaining how to create a Fabric capacity, so I won't go into details here.

- Then I set up a basic lakehouse from the Lakehouse tutorial on Microsoft Learn. I followed the tutorial up until step 3 (Build a lakehouse), where I ended up with a Dataflow Gen2, a lakehouse and a Power BI (Direct Lake) report on the default dataset.

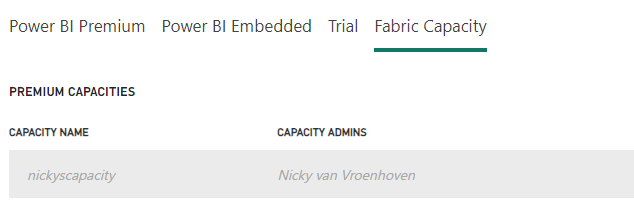

- I also created 2 workspaces:
  - Test Fabric Capacity holds all my Fabric items and has the Fabric capacity (nickyscapacity) assigned. This is the workspace I used for the tutorial. Let's call this the Fabric workspace.
  - Test Fabric semantic model has no capacity assigned, so it's a regular (Pro) workspace. Let's call this the regular workspace.

- After that, I created a few datasets and reports (or semantic models, if you will 😀) using Direct Lake, DirectQuery and Import mode on top of the SQL endpoint of my lakehouse.

Here's the basic report I created; how it looks isn't really important for now:

My tests

The first step, of course, is pausing my capacity, which takes a single button click in the Azure portal.
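If you'd rather script the pause than click the button, Azure Resource Manager exposes suspend and resume actions on `Microsoft.Fabric/capacities` resources. The Python sketch below only builds the POST request and does not send it. The `api-version` value, the placeholder subscription ID and resource group, and the helper names are assumptions for illustration, so check them against the current Azure REST API reference; the bearer token is assumed to come from elsewhere, for example `az account get-access-token`.

```python
import urllib.request
from urllib.parse import quote

# Assumption: ARM API version for Microsoft.Fabric/capacities; verify it
# against the Azure REST API reference before relying on it.
API_VERSION = "2023-11-01"
ARM_BASE = "https://management.azure.com"


def capacity_action_url(subscription_id: str, resource_group: str,
                        capacity_name: str, action: str) -> str:
    """Build the ARM URL that suspends or resumes a Fabric capacity."""
    if action not in ("suspend", "resume"):
        raise ValueError(f"unsupported action: {action!r}")
    return (
        f"{ARM_BASE}/subscriptions/{quote(subscription_id)}"
        f"/resourceGroups/{quote(resource_group)}"
        f"/providers/Microsoft.Fabric/capacities/{quote(capacity_name)}"
        f"/{action}?api-version={API_VERSION}"
    )


def build_capacity_request(url: str, token: str) -> urllib.request.Request:
    """Wrap the URL in an (unsent) POST request with an empty body."""
    return urllib.request.Request(
        url,
        data=b"",
        method="POST",
        headers={"Authorization": f"Bearer {token}"},
    )


if __name__ == "__main__":
    # Hypothetical placeholders; nothing is sent over the network here.
    url = capacity_action_url("00000000-0000-0000-0000-000000000000",
                              "rg-fabric", "nickyscapacity", "suspend")
    print(url)
    # To actually pause the capacity (requires a valid ARM token):
    # urllib.request.urlopen(build_capacity_request(url, token))
```

Swapping `"suspend"` for `"resume"` builds the matching request to start the capacity again.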

Next, I wanted to see what happens when I access certain items or take certain actions in my workspaces.

Here's a list of things I tried to do:

- Access dataflow Gen2

- Access the Lakehouse

- Access SQL Endpoint (of the Lakehouse)

- Access Direct Lake model from Fabric workspace

- Access Direct Lake model from workspace

- Access DQ model from Fabric workspace

- Access DQ dataset from workspace

- Access Import dataset from Fabric workspace

- Access Import dataset from workspace

- Download import dataset and re-publish to workspace

- Refresh Import dataset from workspace

- Move the Fabric workspace to Pro

- Access the Fabric Capacity Metrics app

- Edit capacity settings

Access my dataflow Gen2

Not a very helpful error message 😀

Access the Lakehouse

This one is very helpful: it actually mentions that my capacity (ID) is not running.

Access SQL Endpoint (of the Lakehouse)

Not very helpful: it doesn't say anything about my capacity.

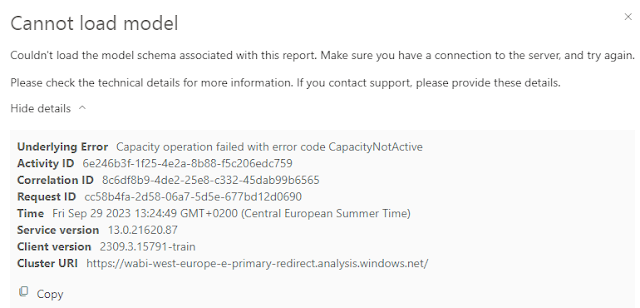

Access Direct Lake model from Fabric workspace

This one IS helpful: it mentions that my capacity is not active, although it doesn't give the ID the way the lakehouse error does.

Access Direct Lake model from workspace

I created a copy of the report in the regular workspace and opened it.

Strangely enough, some parts of the report still work. I suspect this is due to caching that happened before I paused the capacity, and I assume that cache is copied over (with the report) to the regular workspace.

Some interactions worked, but when I clicked a filter value that wasn't cached, I got the same error message as with the DQ model below.

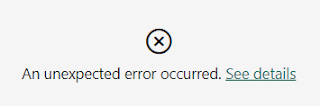

Access DQ model from Fabric workspace

The visual itself shows the error above, and the detailed error message below is not very helpful.

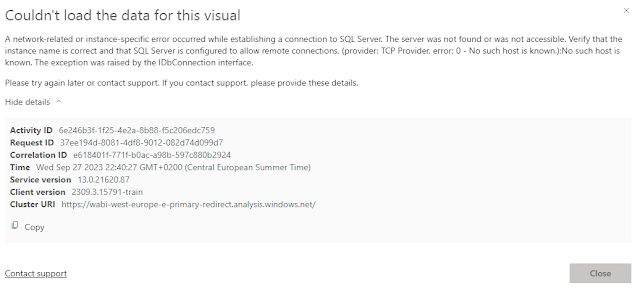

Access DQ dataset from workspace

This one is a bit inconsistent, because I got different errors when repeating this action.

Here I'm accessing the DQ report in the regular workspace. The visual shows a slightly different error, but the detailed error message is completely different from the one in the Fabric workspace: it looks more like a SQL Server error message.

At least it tells me that something is wrong with the SQL endpoint.

But when I tried the same action later, I got the following error, which is very helpful because it mentions CapacityNotActive.

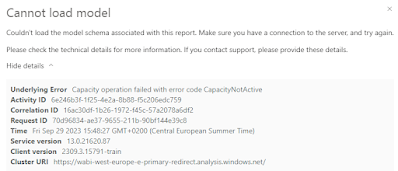

Access Import dataset from Fabric workspace

This one surprised me a bit, because I'm accessing an imported model, so the data is already loaded into the model and no longer read from OneLake. But as we'll see a bit further on, nothing in a Fabric workspace can be accessed when the capacity is paused.

Access Import dataset from workspace ✅

The difference from the action above is that this model lives in the regular workspace. This one succeeds, because the data is stored in the imported model in the regular workspace, which keeps running and has nothing to do with the Fabric capacity.

Download import dataset and re-publish to workspace ✅

Surprisingly (to me), I can still download the dataset from the Fabric workspace. So it seems the dataset itself is not stored in OneLake, since the capacity behind it is paused. It's still a bit strange, then, that the import model can't be opened from this Fabric workspace.

Refresh Import dataset from workspace

This is about refreshing the Import dataset in the regular workspace, the one I could still open. However, the refresh itself fails, because it needs the lakehouse data, which is not available.

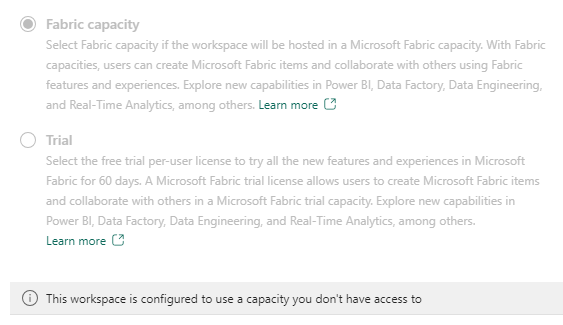

Move the Fabric workspace to Pro

When you try to move the Fabric workspace to a regular (Pro) workspace, you might be thrown off by this message at the bottom of the workspace's Premium settings:

This is also mentioned as one of the current restrictions.

I would urge you to carefully read those restrictions, the known issue(s), and how things are supposed to work once the known issue is resolved, especially if you plan to move items between regions after a workspace has been created.

Access the Fabric Capacity Metrics App ✅

The Fabric Capacity Metrics app just keeps working. It doesn't need the capacity itself to run; it reports on the telemetry that was logged for the capacity.

Editing capacity settings

The capacity settings in the Fabric Admin portal are shown in italics and cannot be edited until you resume the capacity.

Conclusion

So, to conclude: all items in a Fabric workspace become unavailable (for interactive use) when its capacity is paused, including Power BI-only items.

You can still download an import dataset from the workspace, and you can export the .json file of a dataflow (Gen1 and Gen2). But that's about all you can do in a workspace with a paused Fabric capacity.

1: Depending on how you created the report, it might still have some cached data, so it might partially work.

Thanks to Štěpán Rešl for pointing out the Usage Metrics report.

So for now, it's best to keep Fabric and non-Fabric items in separate workspaces, so you can always access the Power BI-only items when the capacity is paused. If you are running a free trial capacity rather than your own capacity, you don't have to take this into account for now.
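To plan that split, the test results above boil down to a simple rule: only Import semantic models stay readable from a regular workspace while the capacity is paused, because Direct Lake and DirectQuery models still query the paused lakehouse (and refreshes fail either way). The Python sketch below encodes that rule. The `Item` class, the `kind` and `storage_mode` labels and the item names are hypothetical and not part of any Fabric or Power BI API.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Item:
    """Hypothetical description of a workspace item."""
    name: str
    kind: str                           # e.g. "Lakehouse", "SemanticModel"
    storage_mode: Optional[str] = None  # "Import", "DirectQuery" or "DirectLake"


def readable_while_paused(item: Item) -> bool:
    """Per the tests above: only Import models in a regular (Pro) workspace
    keep working when the Fabric capacity is paused (refresh still fails)."""
    return item.kind == "SemanticModel" and item.storage_mode == "Import"


def plan_split(items: List[Item]) -> Tuple[List[Item], List[Item]]:
    """Return (move to the regular workspace, keep in the Fabric workspace)."""
    move: List[Item] = []
    keep: List[Item] = []
    for item in items:
        (move if readable_while_paused(item) else keep).append(item)
    return move, keep


if __name__ == "__main__":
    items = [
        Item("Lakehouse", "Lakehouse"),
        Item("Sales (Direct Lake)", "SemanticModel", "DirectLake"),
        Item("Sales (DQ)", "SemanticModel", "DirectQuery"),
        Item("Sales (Import)", "SemanticModel", "Import"),
    ]
    move, keep = plan_split(items)
    print("Move to regular workspace:", [i.name for i in move])
    print("Keep in Fabric workspace:", [i.name for i in keep])
```

With these example items, only `Sales (Import)` ends up in the move list; the Direct Lake and DirectQuery models stay next to the lakehouse, since moving them wouldn't keep them working anyway.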

I hope this overview was useful to you; at least I can use it as a reference and lookup post :-)

I am sure a couple of things will change in the near future, as Fabric updates keep coming out regularly.

If you're missing something from this overview, let me know in the comments and I'll see if I can add it here.
